GOAP is a state based approach to automated planning and decision making, which delivers highly flexible and performant results

STRIPS

Strips is an acronym for STandford Research Institute Problem Solver.

GOAP (Original)

GOAP is an implementation of STRIPS algorithm created specifically for the first person shooter game FEAR

GOAP requires that NPCs in the game be described with

- Actions - Things that NPCs can do

- Preconditions - State conditions required before an action can be undertaken

- Costs - The cost of performing an action

- Effects - The end result to the environment, if the action occurs

- Goals

- Finishing conditions for a character’s plans

Implementations include:

- https://github.com/cpowell/cppGOAP

- https://www.unrealengine.com/marketplace/en-US/product/goap-npc-goal-oriented-action-planning-for-non-player-characters

- https://github.com/GarrenSouza/GOAPFramework

- https://github.com/stolk/GPGOAP

A* Algorithm

The heart of the GOAP implementation is the A* (A star) algorithm which finds the path of least cost, in a highly performant heuristic search. Path of least cost is distinct to shortest path as the resulting path is based on a graph traversal with edge costs.

- https://www.redblobgames.com/pathfinding/a-star/introduction.html (Recommended read)

- http://theory.stanford.edu/~amitp/GameProgramming/ImplementationNotes.html

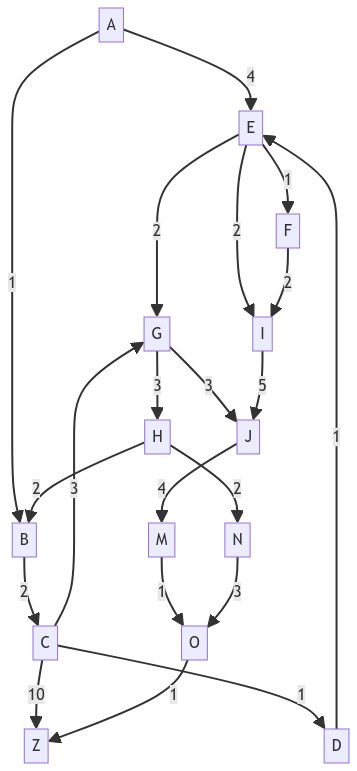

Worked example of A* Algorithm

How to get from A-Z using the A*star algorithm

First we define a type to store the graph representation

class Graph():

def __init__(self):

self.start = None

self.nodes = {}

def add(self, id, neighbors, costs):

assert len(neighbors) == len(costs), \

'Expect all neighbors to have one cost'

node = {

'id': id,

'neighbors' : neighbors,

'costs' : costs

}

self.nodes[id] = node

def cost(self, from_id, to_id):

node = self.nodes[from_id]

if to_id in node['neighbors']:

return [c for n,c in zip(node['neighbors'], node['costs']) if n == to_id][0]

return [99]

def neighbors(self, from_id):

node = self.nodes[from_id]

return node['neighbors']

def edges(self, from_id, to_id, depth = None):

if(depth == None):

depth = len(self.nodes.keys())

if depth > 0 and from_id in self.nodes.keys():

node = self.nodes[from_id]

if to_id in node['neighbors']:

return 1

distances = [(n, self.edges(n, to_id, depth - 1) + 1) for n in node['neighbors']]

distances = sorted(distances, key = lambda d: d[1])

return distances[0][1]

else:

return 99

Then we define the graph instance

graph = Graph()

graph.add('A',['E','B'],[4,1])

graph.add('B',['C',],[2,])

graph.add('C',['D','G','Z'],[1,3,10])

graph.add('D',['E',],[1,])

graph.add('E',['G','I','F'],[2,2,1])

graph.add('F',['I',],[2,])

graph.add('G',['J','H'],[3,3])

graph.add('H',['B','N'],[2,2])

graph.add('I',['J',],[5,])

graph.add('J',['M',],[4,])

graph.add('M',['O',],[1,])

graph.add('N',['O',],[3,])

graph.add('O',['Z',],[1,])

We define the start and goal nodes, and a heuristic which is the effective estimate of the distance between a point and the goal. In a geographic example, this would be a measure in euclidean distance, eg. metres, kilometres, etc.

start = 'A'

goal = 'Z'

heuristic = lambda goal, next : graph.edges(next, goal) * 10

Finally we assess the graph, with the A* algorithm

frontier = PriorityQueue()

frontier.put(start, 0)

came_from = dict()

cost_so_far = dict()

came_from[start] = None

cost_so_far[start] = 0

while not frontier.empty():

current = frontier.get()

if current == goal:

break

for next in graph.neighbors(current):

new_cost = cost_so_far[current] + graph.cost(current, next)

if next not in cost_so_far or new_cost < cost_so_far[next]:

cost_so_far[next] = new_cost

priority = new_cost + heuristic(goal, next)

frontier.put(next, priority)

came_from[next] = current

The path search results are stored in came_from as follows:

print(came_from)

{'A': None,

'B': 'A',

'C': 'B',

'D': 'C',

'E': 'A',

'F': 'E',

'G': 'C',

'H': 'G',

'I': 'E',

'J': 'G',

'M': 'J',

'N': 'H',

'O': 'M',

'Z': 'C'}

And the cost of travelling to the goal can be found in the cost_so_far dictionary

print(cost_so_far)

{'A': 0,

'B': 1,

'C': 3,

'D': 4,

'E': 4,

'F': 5,

'G': 6,

'H': 9,

'I': 6,

'J': 9,

'M': 13,

'N': 11,

'O': 14,

'Z': 13}

So, using the chosen heuristic, the path is A->B->C->Z, and we can see this by reading the came_from dictionary in reverse, from goal to start.

Example

Goal-Oriented Action Planning (GOAP) is a popular technique used in game development and artificial intelligence to create intelligent behaviors for characters or agents in a game world. GOAP involves selecting a sequence of actions to achieve a specific goal. Each action has preconditions that must be satisfied for it to be executed and effects that modify the world state when the action is completed.

In this C# example, we’ll create a simple representation of GOAP with a few actions and a basic agent. Let’s consider an agent that needs to collect resources and build a structure.

using System;

using System.Collections.Generic;

// Action class representing a basic action in the GOAP system

public class Action

{

public string Name { get; }

public Dictionary<string, bool> Preconditions { get; }

public Dictionary<string, bool> Effects { get; }

public Action(string name, Dictionary<string, bool> preconditions, Dictionary<string, bool> effects)

{

Name = name;

Preconditions = preconditions;

Effects = effects;

}

}

// Agent class representing an agent using Goal-Oriented Action Planning

public class Agent

{

public string Name { get; }

public Dictionary<string, bool> WorldState { get; set; }

public Queue<Action> Plan { get; set; }

public Agent(string name)

{

Name = name;

WorldState = new Dictionary<string, bool>();

Plan = new Queue<Action>();

}

// Method to set the agent's current world state

public void SetWorldState(Dictionary<string, bool> worldState)

{

WorldState = new Dictionary<string, bool>(worldState);

}

// Method to create a plan to achieve a goal using GOAP

public void CreatePlan(Dictionary<string, bool> goalState, List<Action> availableActions)

{

Plan.Clear();

// TODO: Implement the GOAP algorithm to create a plan to achieve the goal

// (This implementation is simplified for demonstration purposes)

foreach (var action in availableActions)

{

if (IsActionValid(action) && HasRequiredEffects(action, goalState))

{

Plan.Enqueue(action);

}

}

}

// Method to check if an action is valid based on preconditions

private bool IsActionValid(Action action)

{

foreach (var precondition in action.Preconditions)

{

if (!WorldState.ContainsKey(precondition.Key) || WorldState[precondition.Key] != precondition.Value)

{

return false;

}

}

return true;

}

// Method to check if an action contains the required effects to achieve the goal

private bool HasRequiredEffects(Action action, Dictionary<string, bool> goalState)

{

foreach (var effect in goalState)

{

if (!action.Effects.ContainsKey(effect.Key) || action.Effects[effect.Key] != effect.Value)

{

return false;

}

}

return true;

}

// Method to execute the plan and update the world state

public void ExecutePlan()

{

while (Plan.Count > 0)

{

Action action = Plan.Dequeue();

Console.WriteLine($"{Name} executes action: {action.Name}");

ApplyEffects(action.Effects);

}

}

// Method to apply the effects of an action to the world state

private void ApplyEffects(Dictionary<string, bool> effects)

{

foreach (var effect in effects)

{

WorldState[effect.Key] = effect.Value;

}

}

}

public class Program

{

public static void Main()

{

// Define available actions

var gatherResources = new Action(

"Gather Resources",

new Dictionary<string, bool>(),

new Dictionary<string, bool> { { "HasResources", true } }

);

var buildStructure = new Action(

"Build Structure",

new Dictionary<string, bool> { { "HasResources", true } },

new Dictionary<string, bool> { { "HasStructure", true }, { "HasResources", false } }

);

// Create the agent

var agent = new Agent("Agent 1");

// Set the agent's initial world state

var initialWorldState = new Dictionary<string, bool> { { "HasResources", false }, { "HasStructure", false } };

agent.SetWorldState(initialWorldState);

// Define the goal state

var goalState = new Dictionary<string, bool> { { "HasStructure", true } };

// Create a plan to achieve the goal

agent.CreatePlan(goalState, new List<Action> { gatherResources, buildStructure });

// Execute the plan

agent.ExecutePlan();

// Print the final world state

Console.WriteLine("Final World State:");

foreach (var kvp in agent.WorldState)

{

Console.WriteLine($"{kvp.Key}: {kvp.Value}");

}

}

}

Please note that this implementation of GOAP is simplified for demonstration purposes and might not be suitable for complex real-world scenarios. In a real game or application, you would need to implement a more sophisticated version of the GOAP algorithm and consider factors like cost, time, and more complex world state representations. Nevertheless, this example provides a basic understanding of how GOAP works in a simple context.

More complex example

In this more complex C# example, we’ll enhance the GOAP implementation to include time, cost, and state changes with a real-world scenario. Let’s consider an agent navigating through a grid-based world to collect resources and construct a building, taking into account the passage of time and the cost associated with actions.

using System;

using System.Collections.Generic;

// Action class representing a basic action in the GOAP system

public class Action

{

public string Name { get; }

public Dictionary<string, bool> Preconditions { get; }

public Dictionary<string, bool> Effects { get; }

public float Cost { get; }

public Action(string name, Dictionary<string, bool> preconditions, Dictionary<string, bool> effects, float cost)

{

Name = name;

Preconditions = preconditions;

Effects = effects;

Cost = cost;

}

}

// Agent class representing an agent using Goal-Oriented Action Planning

public class Agent

{

public string Name { get; }

public Dictionary<string, bool> WorldState { get; set; }

public Queue<Action> Plan { get; set; }

public float Time { get; set; }

public Agent(string name)

{

Name = name;

WorldState = new Dictionary<string, bool>();

Plan = new Queue<Action>();

Time = 0f;

}

// Method to set the agent's current world state

public void SetWorldState(Dictionary<string, bool> worldState)

{

WorldState = new Dictionary<string, bool>(worldState);

}

// Method to create a plan to achieve a goal using GOAP

public void CreatePlan(Dictionary<string, bool> goalState, List<Action> availableActions)

{

Plan.Clear();

float currentTime = Time;

Dictionary<string, bool> currentWorldState = new Dictionary<string, bool>(WorldState);

// TODO: Implement the GOAP algorithm to create a plan to achieve the goal

// (This implementation is simplified for demonstration purposes)

// You may want to use more advanced pathfinding and planning algorithms in a real game.

// For this example, let's just add actions without considering their cost or time requirements.

foreach (var action in availableActions)

{

if (IsActionValid(action, currentWorldState) && HasRequiredEffects(action, goalState))

{

Plan.Enqueue(action);

UpdateWorldState(currentWorldState, action.Effects);

currentTime += action.Cost;

}

}

Time = currentTime;

}

// Method to check if an action is valid based on preconditions

private bool IsActionValid(Action action, Dictionary<string, bool> worldState)

{

foreach (var precondition in action.Preconditions)

{

if (!worldState.ContainsKey(precondition.Key) || worldState[precondition.Key] != precondition.Value)

{

return false;

}

}

return true;

}

// Method to check if an action contains the required effects to achieve the goal

private bool HasRequiredEffects(Action action, Dictionary<string, bool> goalState)

{

foreach (var effect in goalState)

{

if (!action.Effects.ContainsKey(effect.Key) || action.Effects[effect.Key] != effect.Value)

{

return false;

}

}

return true;

}

// Method to apply the effects of an action to the world state

private void UpdateWorldState(Dictionary<string, bool> worldState, Dictionary<string, bool> effects)

{

foreach (var effect in effects)

{

worldState[effect.Key] = effect.Value;

}

}

// Method to execute the plan and update the world state and time

public void ExecutePlan()

{

while (Plan.Count > 0)

{

Action action = Plan.Dequeue();

Console.WriteLine($"{Name} executes action: {action.Name} (Time: {Time})");

UpdateWorldState(WorldState, action.Effects);

Time += action.Cost;

}

}

}

public class Program

{

public static void Main()

{

// Define available actions

var gatherResources = new Action(

"Gather Resources",

new Dictionary<string, bool>(),

new Dictionary<string, bool> { { "HasResources", true } },

2f // Cost to gather resources

);

var buildStructure = new Action(

"Build Structure",

new Dictionary<string, bool> { { "HasResources", true } },

new Dictionary<string, bool> { { "HasStructure", true }, { "HasResources", false } },

5f // Cost to build a structure

);

// Create the agent

var agent = new Agent("Agent 1");

// Set the agent's initial world state

var initialWorldState = new Dictionary<string, bool> { { "HasResources", false }, { "HasStructure", false } };

agent.SetWorldState(initialWorldState);

// Define the goal state

var goalState = new Dictionary<string, bool> { { "HasStructure", true } };

// Create a plan to achieve the goal

agent.CreatePlan(goalState, new List<Action> { gatherResources, buildStructure });

// Execute the plan

agent.ExecutePlan();

// Print the final world state and time

Console.WriteLine("Final World State:");

foreach (var kvp in agent.WorldState)

{

Console.WriteLine($"{kvp.Key}: {kvp.Value}");

}

Console.WriteLine($"Final Time: {agent.Time}");

}

}

In this example, we added a Time property to the Agent class to represent the passage of time. Each action now has an associated Cost to execute it, and the agent keeps track of the time and updates it accordingly while executing the plan. The implementation of GOAP in this example is still simplified, and for a real-world scenario, you’d likely want to use more advanced pathfinding and planning algorithms. However, this example should give you a sense of how GOAP can be expanded to handle time, cost, and state changes in a more complex environment.